GoTo AI Trust Center

Last modified March 3, 2025

Introduction

GoTo empowers customers with tools to help them increase their productivity and efficiency by integrating artificial intelligence (AI) services into our business communication, collaboration, remote support, and IT management products ("GoTo Services").

We use Large Language Models (LLMs) and foundational AI models to support a growing number of use cases across our GoTo Services, such as interactive AI-based assistants, IT troubleshooting suggestions, IT management script generation and analysis, summarizing meetings and calls, and providing operational business insights.

While AI offers significant benefits, it is also a rapidly evolving technology with potential risks. On this page, we explain GoTo’s secure development practices, which are designed to manage these risks and help our customers benefit from AI-based features and functionality.

Privacy and security are top-of-mind when GoTo designs any AI feature. For more information about GoTo’s Privacy and Security programs, please visit our Trust Center.

Responsible Use of AI at GoTo

GoTo is committed to responsibly using artificial intelligence to provide product features that promote efficiency while protecting against unethical or otherwise harmful outcomes. As such, our AI-enabled product features are designed with the principles of responsible AI in mind:

- Fairness. Protections against output that is biased or discriminatory, including minimizing the use of data inputs that can lead to discriminatory model behavior.

- Transparency. Customers and end users know they are interacting with AI and using AI-enabled features. GoTo explains how and where AI is incorporated into products and how it works.

- Accountability. Oversight and auditing mechanisms are implemented to identify unexpected or harmful output. Features are designed to enable human oversight and control in a way that is commensurate with the risks and nature of the AI-enabled feature.

- Privacy and Security. GoTo implements robust system designs with regular testing and monitoring to protect data. Privacy by design is incorporated into the design of AI-enabled features, which are designed to process only the minimum amount of data needed to fulfill their purpose.

- Ethical Use. AI is used for positive and not harmful purposes. Controls are in place to protect against AI hallucinations, misinformation, inaccuracies, human rights violations, and other potentially harmful outputs.

Guiding Principle: Our AI Trust Model

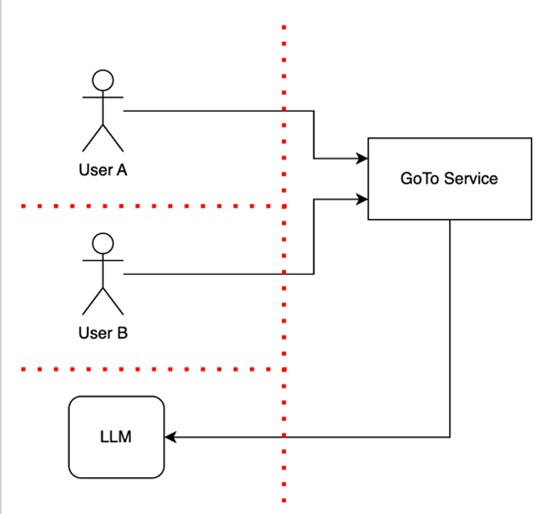

GoTo’s approach to AI feature design involves implementing trust barriers that separate users’ data from one another and from the product infrastructure. Under this trust model, neither humans nor AI models are placed in the same trust enclave as the trusted infrastructure, as the diagram illustrates.

The diagram shows two users, User A and User B, using a GoTo Service that includes an LLM-based feature, indicated by the arrow to an LLM. The red line represents trust barriers that are secured to protect sensitive information and functionality, and to separate users from each other and from the product infrastructure, including the LLM. These trust barriers allow trust checks (for example, authentication, authorization, and sanity checks) to be performed whenever control or data flows cross them.

As this diagram shows, User B does not have access to data or devices belonging to User A.

This trust model helps protect Customer data from unauthorized access or use, and helps protect GoTo’s Services from attacks or vulnerabilities.
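To make the idea of trust checks at a barrier more concrete, the following is a minimal Python sketch, not GoTo's implementation. It assumes a hypothetical in-memory `SessionStore` in which every request that crosses the barrier is authenticated (a known token), authorized (the caller owns the session), and sanity-checked (the prompt is non-empty and within an illustrative length limit). The class names, tokens, and limits are all hypothetical.

```python
from dataclasses import dataclass, field


class TrustBarrierError(Exception):
    """Raised when a request fails a check at a trust barrier."""


@dataclass
class Session:
    owner_id: str
    messages: list[str] = field(default_factory=list)


class SessionStore:
    """Hypothetical per-user LLM session store with checks at its trust barrier."""

    MAX_PROMPT_CHARS = 4000  # illustrative sanity-check limit, not a GoTo value

    def __init__(self) -> None:
        self._sessions: dict[str, Session] = {}
        self._tokens: dict[str, str] = {}  # token -> user id; stand-in for real authentication

    def register(self, user_id: str, token: str, session_id: str) -> None:
        self._tokens[token] = user_id
        self._sessions[session_id] = Session(owner_id=user_id)

    def _authorize(self, token: str, session_id: str) -> Session:
        # Authentication: the caller must present a known token.
        user_id = self._tokens.get(token)
        if user_id is None:
            raise TrustBarrierError("authentication failed")
        # Authorization: users may only reach sessions they own. A missing session
        # gets the same error so callers cannot probe for other users' sessions.
        session = self._sessions.get(session_id)
        if session is None or session.owner_id != user_id:
            raise TrustBarrierError("not authorized for this session")
        return session

    def append_prompt(self, token: str, session_id: str, prompt: str) -> None:
        session = self._authorize(token, session_id)
        # Sanity check on data crossing the barrier toward the LLM.
        if not prompt.strip() or len(prompt) > self.MAX_PROMPT_CHARS:
            raise TrustBarrierError("prompt failed sanity check")
        session.messages.append(prompt)

    def read_history(self, token: str, session_id: str) -> list[str]:
        session = self._authorize(token, session_id)
        return list(session.messages)  # return a copy, never the internal list
```

With User A and User B both registered, a call such as `store.read_history("tok-b", "s-a")` raises `TrustBarrierError`. This mirrors the diagram: User B cannot reach User A's conversation, even though both users share the same service and LLM.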

Safeguard Mechanisms

When designing LLM applications or LLM-based features, GoTo stays informed about LLM-specific and other AI-related risks, such as prompt injection, training data poisoning, and supply chain vulnerabilities, and implements safeguards to address them. With GoTo’s trust model and these risks in mind, we use multiple mechanisms, such as human in control and multi-tenancy, which are detailed below. These mechanisms are designed to help our users benefit from AI applications and features while keeping their data secure.

While AI models provide valuable mechanisms to automate tasks, generate answers to queries, and produce various artifacts, it’s important for users to remember that these models differ from traditional software services in that they can produce incorrect answers or unintended output. When using AI-based applications, the trust you place in outputs from AI models (including LLMs) should be similar to your level of trust in outputs created by other users. In short, trust but verify: you should always verify the completeness and accuracy of AI outputs generated in response to your prompts.

With that in mind, the following safeguard mechanisms are implemented where appropriate when AI is incorporated into GoTo Services:

- Human in Control. AI-enabled features are designed to give the user the last word on whether and how the output generated by an AI model (such as an LLM) is used. Users stay in control, and sensitive actions require human approval.

- Multi-Tenancy with LLM. GoTo ensures that individual users have separate sessions (conversations) with the LLM service, which are not accessible by other users. This means other users cannot access sensitive information shared inadvertently in a prompt.

- Customer Data Is Not Used by Third Parties to Train Their LLM Services. When GoTo provides AI-enabled features through a third-party provider, that provider is prohibited from using GoTo customers’ data for training purposes.

- Input and Output Validation. GoTo’s Services validate the format of inputs using common validation techniques, including regular expressions and parsers. Our Services reject certain types of unexpected inputs and outputs based on validation results to reduce security risks, prevent data loss or corruption, and minimize errors. For example, we check for unexpected inputs and outputs that appear to be text injections, irrelevant prompts, corrupted data, or unforced error cases.
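The sketch below illustrates the validation approach described above, using a regular expression on the input side and a parser on the output side. It is a hypothetical example, not GoTo's validation logic. The length limit, the denylist pattern, and the expected JSON output shape (`summary` and `steps`) are all assumptions chosen for illustration. A simple pattern list like this catches only crude injection attempts, so in practice it would be one layer among several.

```python
import json
import re

# Illustrative limits and patterns only; real injection defenses need more than a denylist.
MAX_INPUT_CHARS = 2000
CONTROL_CHARS = re.compile(r"[\x00-\x08\x0b\x0c\x0e-\x1f]")  # allows tab, newline, CR
SUSPICIOUS_INPUT = re.compile(
    r"ignore (all )?(previous|prior) instructions|system prompt",
    re.IGNORECASE,
)


def validate_input(prompt: str) -> str:
    """Reject empty, oversized, corrupted, or injection-like prompts."""
    if not prompt.strip():
        raise ValueError("empty prompt")
    if len(prompt) > MAX_INPUT_CHARS:
        raise ValueError("prompt too long")
    if CONTROL_CHARS.search(prompt):
        raise ValueError("prompt contains control characters")
    if SUSPICIOUS_INPUT.search(prompt):
        raise ValueError("prompt looks like a text injection")
    return prompt.strip()


def validate_output(raw: str) -> dict:
    """Parse model output that is expected to be {"summary": str, "steps": [str, ...]}."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError("output is not valid JSON") from exc
    if not isinstance(data, dict) or set(data) != {"summary", "steps"}:
        raise ValueError("output has unexpected fields")
    if not isinstance(data["summary"], str):
        raise ValueError("summary must be a string")
    steps = data["steps"]
    if not isinstance(steps, list) or not all(isinstance(s, str) for s in steps):
        raise ValueError("steps must be a list of strings")
    return data
```

Validating output against a fixed, expected shape means that malformed or unexpected model responses are rejected before they reach the user or any downstream automation.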

Transparency: Use of AI Features

We strive to clearly identify features that are AI-enabled in GoTo Services. For example, we label features that use AI with text or a sparkle icon, as shown below. Our customers can choose whether to use the feature and its output. In some instances, customers or users can disable AI-enabled features that they do not want to use. Please refer to product-specific support pages for more information.

Examples of AI feature identification:

Transparency: Data Use

In limited cases, and only where customers are given a choice concerning this use, GoTo may use customer data to train GoTo’s AI models and help improve a product’s overall output quality. For customers who choose to allow this data use, GoTo takes steps to process only the information that is needed, limiting LLM requests to essential contextual details, as further explained below in the section “Data Minimization.” As an example of this approach, please see the LogMeIn Resolve User Content Usage – FAQ.

We provide further information about the use of AI-enabled features in GoTo products in the GoTo AI Terms, which apply to a customer’s use of these features.

Data Minimization

GoTo seeks to limit LLM requests to essential contextual details. If a customer wants to include contextual details in an LLM input to improve accuracy, GoTo Services seek to use only the information that is relevant to the request, selected using a technique called Retrieval Augmented Generation (RAG). For example, when diagnosing a device in LogMeIn Resolve or Rescue, we include device data such as CPU and memory but exclude personal data about the device’s users.
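The following Python sketch shows data minimization in the spirit of the device diagnosis example, under stated assumptions. It is not GoTo's pipeline. The device field names are hypothetical. Device data passes through an allowlist, so any field not explicitly permitted, such as a user's name or email, is dropped before the prompt is built. The retrieval step is a toy word-overlap ranking standing in for a real RAG retriever, which would typically use embedding search.

```python
# Hypothetical field names: only these device attributes may reach the LLM.
ALLOWED_DEVICE_FIELDS = {"os_version", "cpu_percent", "memory_percent", "disk_free_gb"}


def minimize_device_data(device: dict) -> dict:
    """Keep only allowlisted fields; anything else (e.g. personal data) is dropped."""
    return {k: v for k, v in device.items() if k in ALLOWED_DEVICE_FIELDS}


def retrieve_relevant(question: str, snippets: list[str], k: int = 2) -> list[str]:
    """Toy retrieval: rank snippets by word overlap with the question, drop non-matches."""
    q_words = set(question.lower().split())
    scored = [(len(q_words & set(s.lower().split())), s) for s in snippets]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [s for _, s in scored[:k]]


def build_prompt(question: str, device: dict, snippets: list[str]) -> str:
    """Assemble a prompt from minimized device facts and relevant context only."""
    facts = minimize_device_data(device)
    fact_lines = [f"{k}: {v}" for k, v in sorted(facts.items())]
    context = retrieve_relevant(question, snippets)
    return "\n".join(["Device:", *fact_lines, "Context:", *context, "Question:", question])
```

An allowlist is used here rather than a denylist. With this choice, a newly added device field stays out of LLM requests until someone deliberately approves it.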

Least Privilege and Feature-based Risk

When designing GoTo Services, including AI-enabled features, we follow a risk-based approach and the principle of least privilege: an AI service has access only to the specific data and resources it needs. We enforce this technically, for example by using technical roles with narrow access privileges.

Before deciding to implement an AI-enabled feature, we assess the risks specific to that feature. Based on that assessment, we limit the privileges and data that the AI service can access to only what the feature needs to function.
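A narrow technical role can be modeled as a deny-by-default mapping from each AI feature to the scopes it may use. The Python sketch below is a hypothetical illustration of least privilege, not GoTo's access-control system. The feature names and scopes are assumptions. In this example, the troubleshooting assistant can read device metrics but not user profiles, and no feature is granted the sensitive `run:scripts` scope.

```python
from enum import Enum
from typing import Callable, TypeVar

T = TypeVar("T")


class Scope(Enum):
    READ_DEVICE_METRICS = "read:device_metrics"
    READ_TICKETS = "read:tickets"
    RUN_SCRIPTS = "run:scripts"
    READ_USER_PROFILES = "read:user_profiles"


# Hypothetical technical roles: one per AI feature, each with a narrow set of scopes.
FEATURE_ROLES: dict[str, frozenset[Scope]] = {
    "troubleshooting_assistant": frozenset({Scope.READ_DEVICE_METRICS}),
    "ticket_summarizer": frozenset({Scope.READ_TICKETS}),
}


def check_access(feature: str, scope: Scope) -> bool:
    """Deny by default: unknown features and ungranted scopes are refused."""
    return scope in FEATURE_ROLES.get(feature, frozenset())


def fetch_for_feature(feature: str, scope: Scope, loader: Callable[[], T]) -> T:
    """Run the data loader only if the feature's role grants the required scope."""
    if not check_access(feature, scope):
        raise PermissionError(f"{feature} lacks {scope.value}")
    return loader()
```

Because the default is an empty scope set, adding a new AI feature grants it nothing until its role is explicitly defined, which matches the idea of assessing feature-based risk before granting access.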